Using ChatGPT as a recommender system: A case study of multiple product domains

Authors: Xianglin ZHAO, Li CHEN, Yucheng JIN

Department of Computer Science, Hong Kong Baptist University

Since OpenAI launched ChatGPT in late 2022, the model has attracted widespread interest from academia and industry, reaching 100 million monthly active users in January 2023 [1]. As a Large Language Model (LLM), ChatGPT has demonstrated impressive natural language processing capabilities, including logical reasoning, question answering, and machine translation. While ChatGPT is known for producing high-quality conversations, it remains an open question whether it can serve as a competent Conversational Recommender System (CRS) [2] that provides personalized recommendations to users. To explore this question, we conducted a case study in three typical domains: entertainment (music), high-cost products (smartphones), and services (travel), with the goal of examining ChatGPT's recommendation capabilities.

Entertainment Items: Music Recommendation

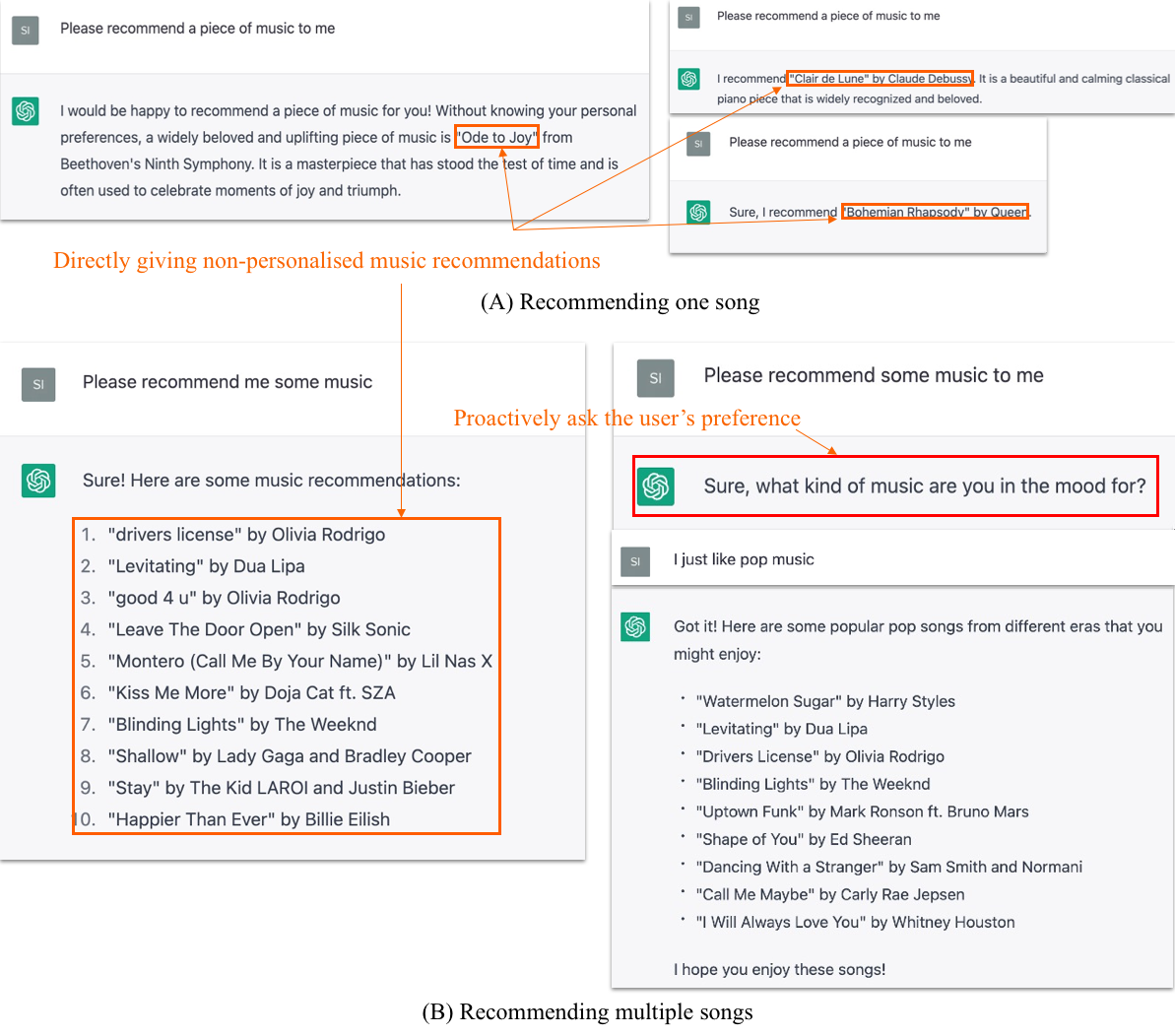

ChatGPT can provide personalized music recommendations based on users' needs, allowing them to refine their preferences over multi-turn conversations. The interaction is typically user-driven (i.e., the user asks and the system answers). For example, we asked ChatGPT “Please recommend a piece of music to me” (Figure 1 (A)) and “Please recommend some music to me” (Figure 1 (B)). The responses are somewhat diverse and vary from one generation to the next, and the initial recommendation strategy is not personalized but is mainly based on item popularity. In one instance (shown in Figure 1), ChatGPT proactively asked the user, “What kind of music are you in the mood for?”

Figure 1: The responses of ChatGPT when the user asks for a recommendation of a piece of music or recommendations of some music.

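The popularity-driven first response corresponds to a classic non-personalized baseline that conventional recommenders use until they know something about the user. The following is a minimal sketch of that fallback, assuming a hypothetical play log and a genre-labelled catalog; the function names and data are illustrative only and do not describe how ChatGPT chooses its answers.

```python
from collections import Counter


def popularity_top_k(play_log: list[str], k: int = 3) -> list[str]:
    """Non-personalized baseline: rank items by how often they appear in an interaction log."""
    counts = Counter(play_log)
    # Counter.most_common orders ties by first occurrence in the log.
    return [item for item, _ in counts.most_common(k)]


def recommend(play_log: list[str], liked_genres: set[str],
              catalog: dict[str, str], k: int = 3) -> list[str]:
    """Fall back to popularity until the user has stated at least one preferred genre."""
    if not liked_genres:
        return popularity_top_k(play_log, k)
    counts = Counter(play_log)
    # Keep only tracks whose genre matches a stated preference, still ranked by popularity.
    matching = [track for track, genre in catalog.items() if genre in liked_genres]
    matching.sort(key=lambda track: counts[track], reverse=True)
    return matching[:k]


# Hypothetical data: track id -> genre, and a log of plays.
catalog = {"track_a": "pop", "track_b": "jazz", "track_c": "jazz"}
play_log = ["track_a", "track_b", "track_a", "track_c", "track_b", "track_a"]
print(recommend(play_log, set(), catalog, k=2))     # ['track_a', 'track_b']
print(recommend(play_log, {"jazz"}, catalog, k=2))  # ['track_b', 'track_c']
```

Once the user states a genre, the same popularity counts are reused only as a tie-breaking order within the matching items, which mirrors the shift from generic to personalized suggestions seen in the conversations.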

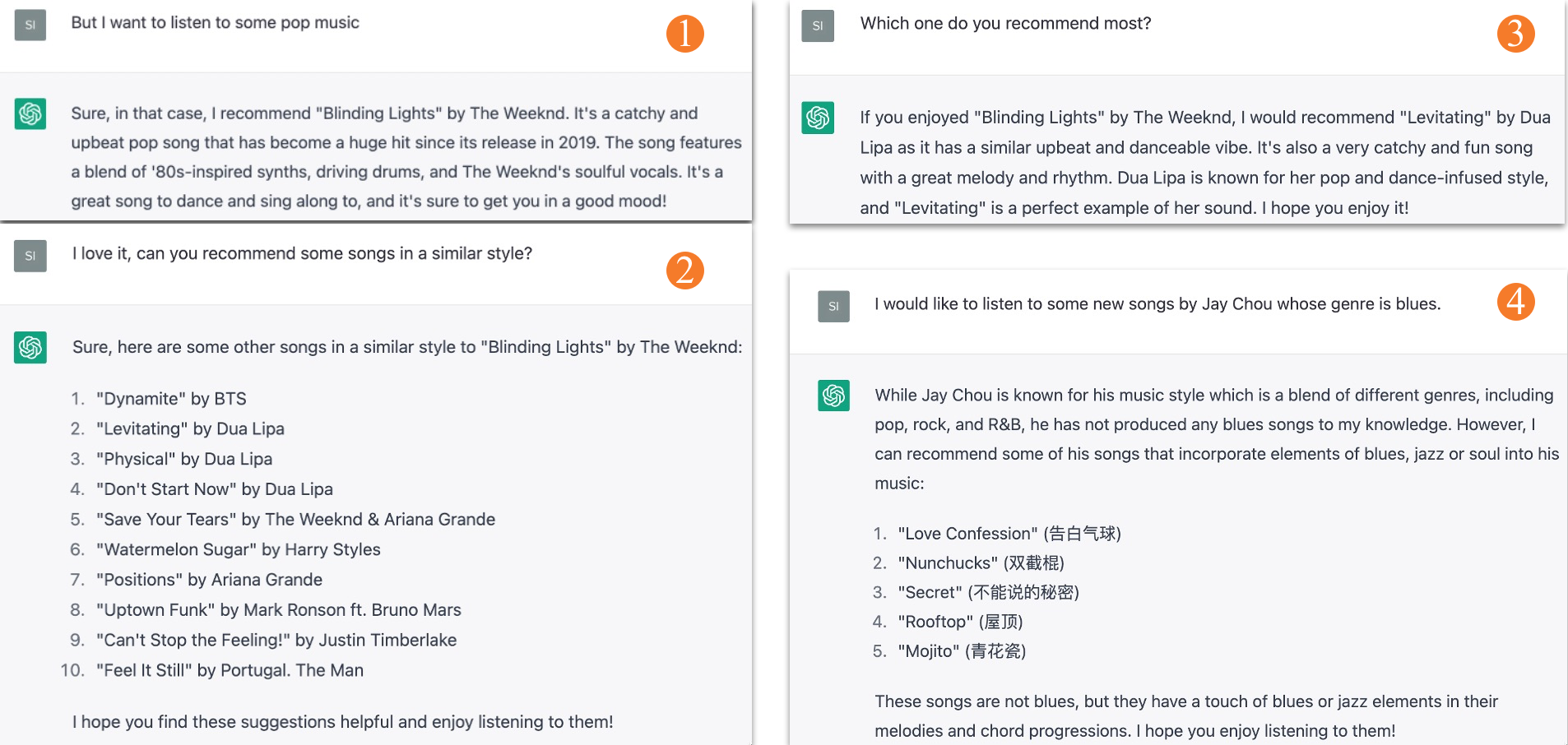

ChatGPT allows users to specify their needs incrementally, so that the recommendations in subsequent turns become more personalized. Figure 2 illustrates how we (as users) provided additional music preferences to obtain personalized recommendations.

Figure 2: The user incrementally specifies her further needs for obtaining personalized recommendations.

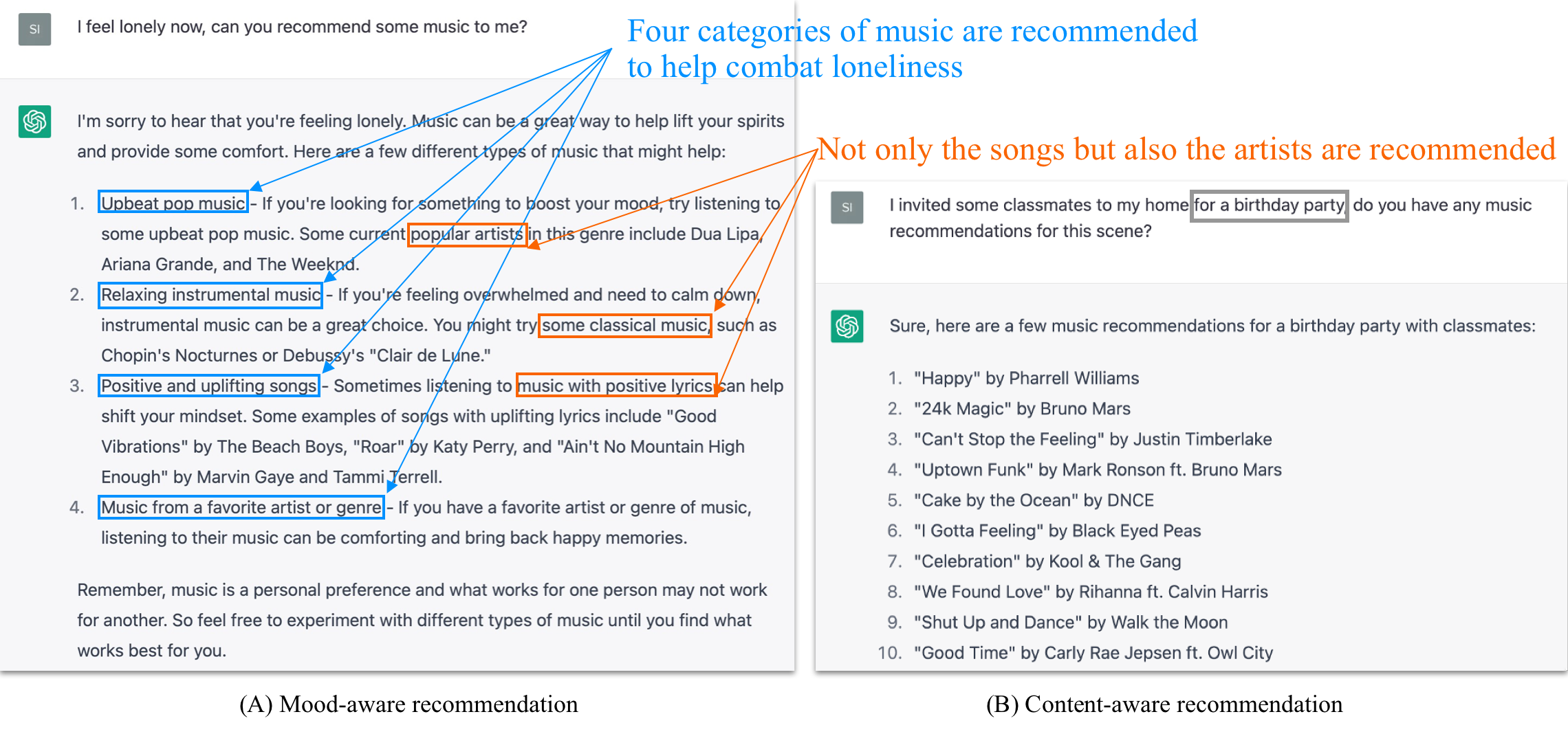

Furthermore, ChatGPT can perform relatively complex recommendation tasks, such as mood-aware or context-aware music recommendation (see Figure 3). For example, in Figure 3 (A), to help the user deal with loneliness, the recommendations cover four types of music: upbeat pop music, relaxing instrumental music, positive and uplifting music, and music from the user's favorite artist or genre. ChatGPT also provides reasonable explanations for these choices.

Figure 3: Examples of mood-aware and context-aware recommendations.

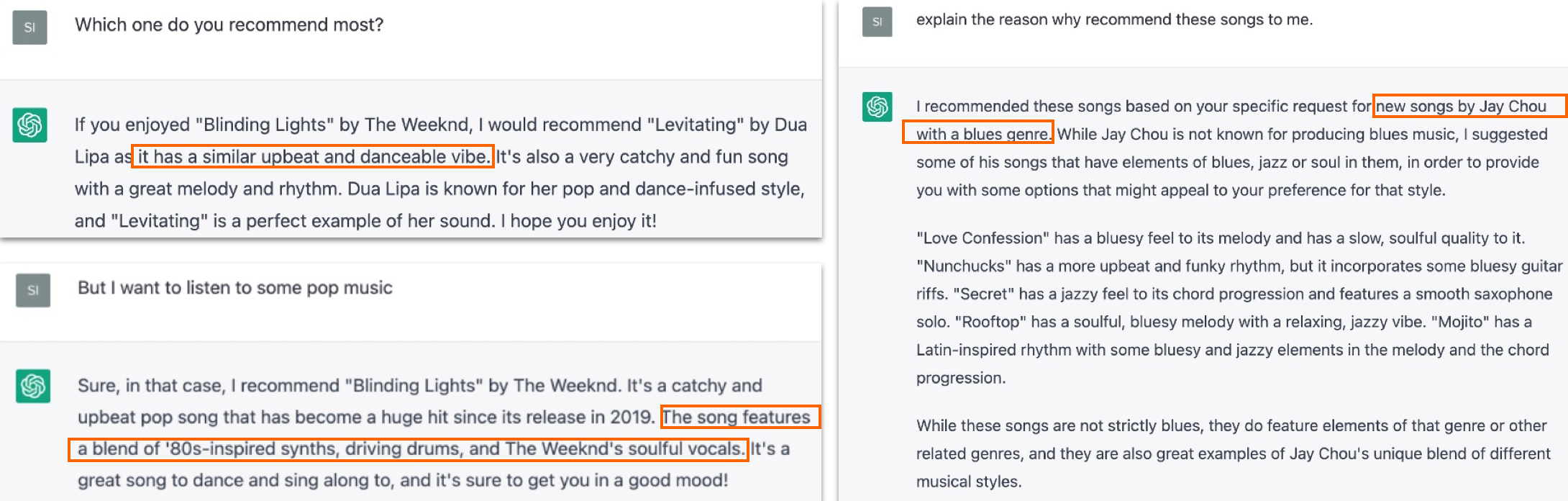

We notice that ChatGPT typically accompanies its music recommendations with explanations. As shown in Figure 4, these explanations are generally knowledge-based, content-based, or case-based. Users can also proactively request an explanation for a recommendation, and ChatGPT can recall their stated preferences and provide a reasonable answer.

Figure 4: Explanation of the recommendation by ChatGPT.

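Of the explanation styles mentioned above, content-based explanations are the easiest to reproduce in a conventional system: the explanation simply cites the item attributes that match what the user asked for. A minimal sketch, assuming hypothetical attribute names and a hypothetical sentence template (this is not how ChatGPT generates its explanations):

```python
def explain(item: dict[str, str], user_prefs: dict[str, str]) -> str:
    """Content-based explanation: cite the item attributes that match the user's stated preferences."""
    matches = [f"its {attr} is {item[attr]}"
               for attr, wanted in user_prefs.items()
               if item.get(attr) == wanted]
    if not matches:
        # No overlapping attributes: say so rather than invent a justification.
        return f"{item['title']} does not match any stated preference."
    return f"{item['title']} is recommended because " + " and ".join(matches) + "."


# Hypothetical item and preferences.
track = {"title": "Track A", "genre": "jazz", "mood": "calm", "artist": "Artist X"}
prefs = {"genre": "jazz", "mood": "calm", "artist": "Artist Y"}
print(explain(track, prefs))
# Track A is recommended because its genre is jazz and its mood is calm.
```

Because every clause is derived from an attribute the item actually has, a template like this cannot fabricate facts, which is the trade-off against the more fluent but less verifiable explanations an LLM produces.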

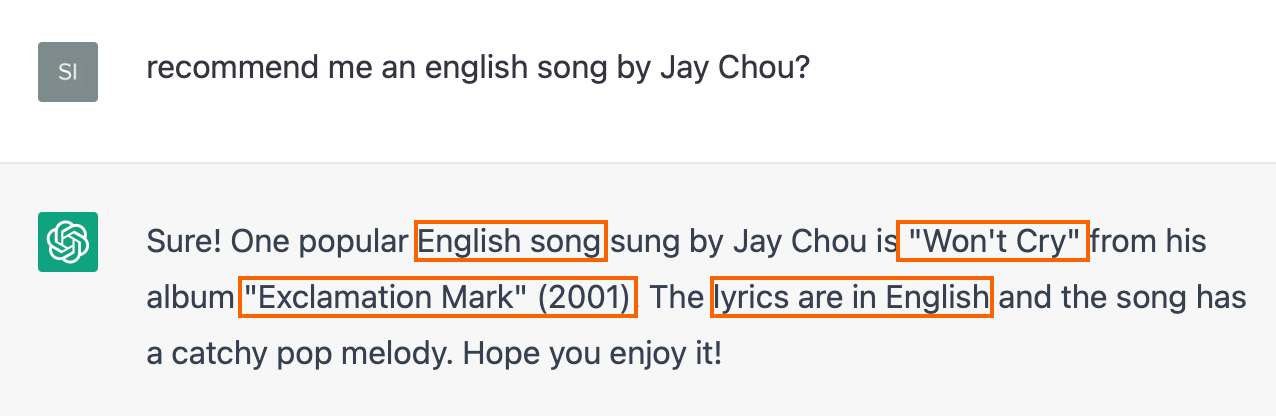

It is worth noting that ChatGPT may generate false or misleading text and fabricate facts. For instance, when asked to recommend an English song by Jay Chou, it produced a response (shown in Figure 5) containing multiple errors. Although the recommended song and album are both by Jay Chou, "Won't Cry" is not an English song, and it does not appear on the album "Exclamation Mark". Furthermore, the album was released in 2011, not 2001.

Figure 5: Example of falsehood generated by ChatGPT.

E-commerce Products: Smartphone Recommendation

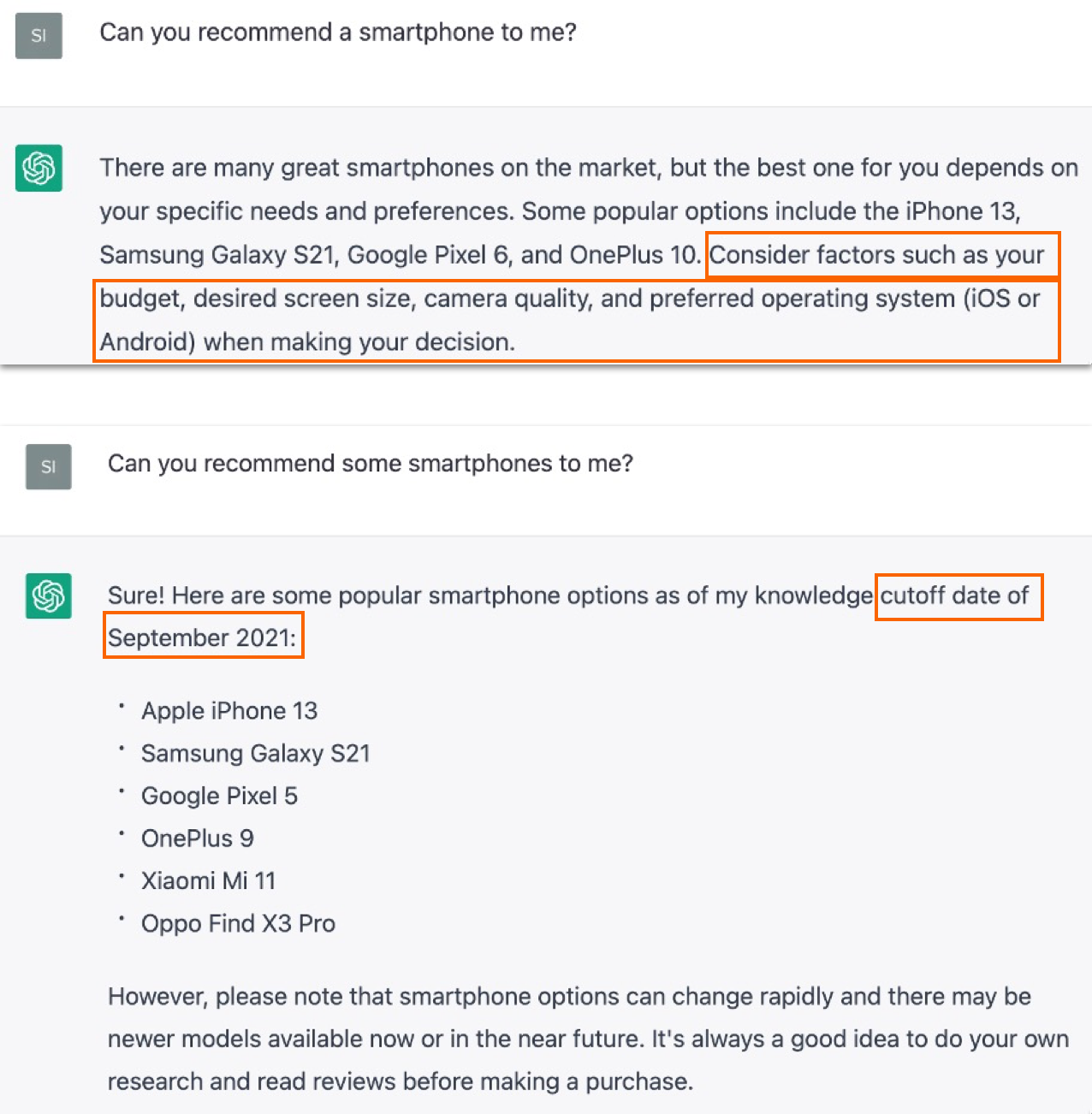

We also tested ChatGPT's performance on smartphone recommendation by asking “Can you recommend a smartphone to me?” The recommendations are mainly popularity-based, but the response also suggests considering factors such as budget, desired screen size, camera quality, and preferred operating system, as illustrated in Figure 6. Note that the recommended items may not be up to date, because ChatGPT states that its knowledge cutoff is September 2021.

Figure 6: The responses of ChatGPT when the user asks for a recommendation of a smartphone or recommendations of some smartphones.

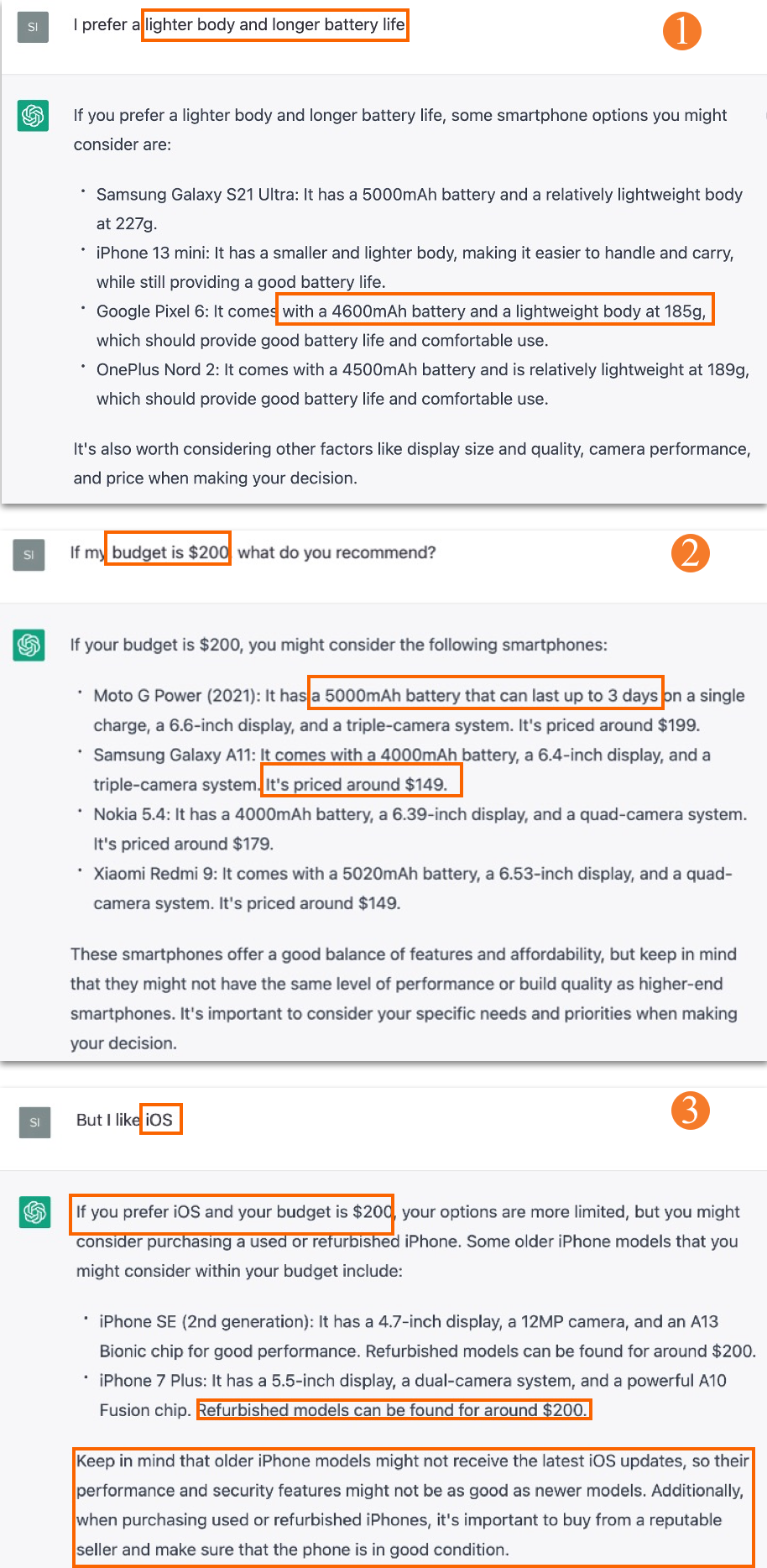

Users can continuously provide preference feedback over multi-turn conversations and receive adapted recommendations in response, which resembles a critiquing-based conversational recommendation method [3] (Figure 7). The responses seem to follow a fixed template: they first restate the user's specified needs, then give recommendations, and finally offer some neutral suggestions or reminders. Some alternative items, such as refurbished iPhones, were also recommended to satisfy the user's special needs.

Figure 7: Example of system response when the user critiques the recommendation.

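In critiquing-based recommenders [3], the user typically reacts to the current item with a critique such as "cheaper" or "bigger screen", and the system then shows an item that satisfies the critique while staying similar to the current one. The sketch below illustrates that general technique only; the phone catalog is hypothetical, closeness in price is an assumed similarity measure, and nothing here describes how ChatGPT handles critiques internally.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Phone:
    name: str
    price: int        # hypothetical price in USD
    screen: float     # screen diagonal in inches
    camera_mp: int    # main camera resolution in megapixels


# Unit critiques: each compares a candidate with the currently recommended phone.
CRITIQUES: dict[str, Callable[[Phone, Phone], bool]] = {
    "cheaper": lambda cand, cur: cand.price < cur.price,
    "bigger screen": lambda cand, cur: cand.screen > cur.screen,
    "better camera": lambda cand, cur: cand.camera_mp > cur.camera_mp,
}


def apply_critique(catalog: list[Phone], current: Phone, critique: str) -> Phone:
    """Return the phone that satisfies the critique while staying closest in price
    to the current recommendation; keep the current phone if nothing qualifies."""
    satisfies = CRITIQUES[critique]
    candidates = [p for p in catalog if p != current and satisfies(p, current)]
    if not candidates:
        return current
    return min(candidates, key=lambda p: abs(p.price - current.price))


catalog = [
    Phone("A", 800, 6.1, 12),
    Phone("B", 500, 6.1, 12),
    Phone("C", 650, 6.7, 48),
    Phone("D", 300, 6.5, 50),
]
current = catalog[0]
for critique in ["cheaper", "bigger screen", "better camera"]:
    current = apply_critique(catalog, current, critique)
    print(critique, "->", current.name)
```

This simplified loop only checks the latest critique against the current item; it does not remember earlier critiques, which is why practical systems often accumulate critiques as persistent constraints.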

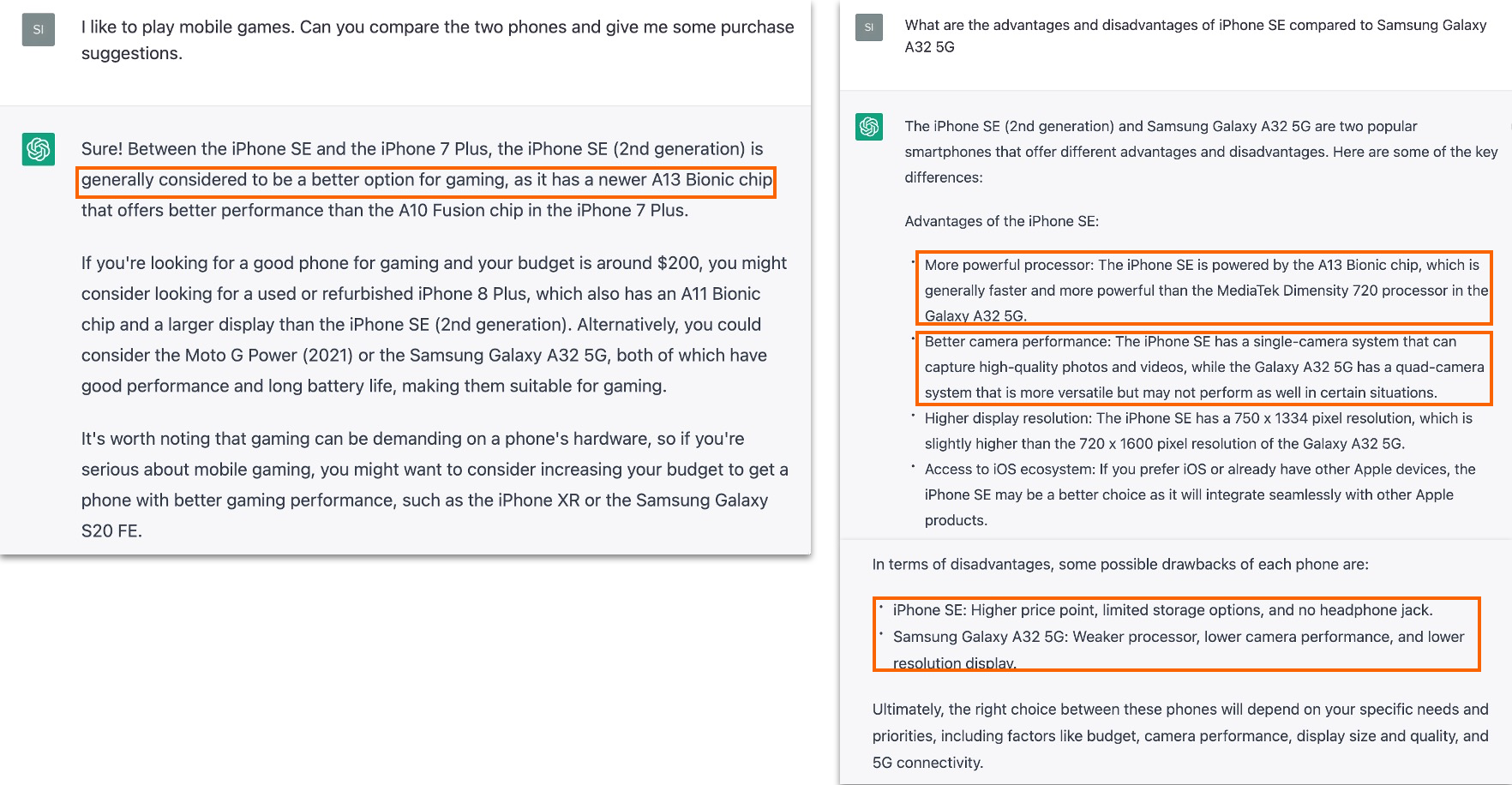

ChatGPT can also compare different products, as shown in Figure 8. Such comparisons may be based on specific attributes, such as processor speed and camera quality. However, the responses generally focus on a product's advantages and are less detailed about its shortcomings. Although the recommended phones may not be the latest generation, we did not observe any major mistakes in the attribute information provided during our testing.

Figure 8: Example of system response when the user requests to compare different products.

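Since ChatGPT's comparisons tend to emphasize advantages, it may help to see what a symmetric, attribute-by-attribute comparison looks like: every attribute is reported as a pro, a con, or a tie, so shortcomings cannot be silently omitted. A minimal sketch, assuming hypothetical phone specifications and an explicit "higher is better" direction for each attribute:

```python
def compare(a: dict[str, float], b: dict[str, float],
            higher_is_better: dict[str, bool]) -> dict[str, list[str]]:
    """Attribute-by-attribute comparison of item a against item b, reporting both sides."""
    report: dict[str, list[str]] = {"pros": [], "cons": [], "ties": []}
    for attr, higher in higher_is_better.items():
        if a[attr] == b[attr]:
            report["ties"].append(attr)
            continue
        # a wins when its value lies in the "better" direction for this attribute.
        a_wins = (a[attr] > b[attr]) == higher
        report["pros" if a_wins else "cons"].append(attr)
    return report


# Hypothetical specifications for two phones.
phone_x = {"price": 900, "battery_mah": 4000, "camera_mp": 48, "storage_gb": 128}
phone_y = {"price": 700, "battery_mah": 4500, "camera_mp": 12, "storage_gb": 128}
direction = {"price": False, "battery_mah": True, "camera_mp": True, "storage_gb": True}
print(compare(phone_x, phone_y, direction))
# {'pros': ['camera_mp'], 'cons': ['price', 'battery_mah'], 'ties': ['storage_gb']}
```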

Service Products: Travel Recommendation

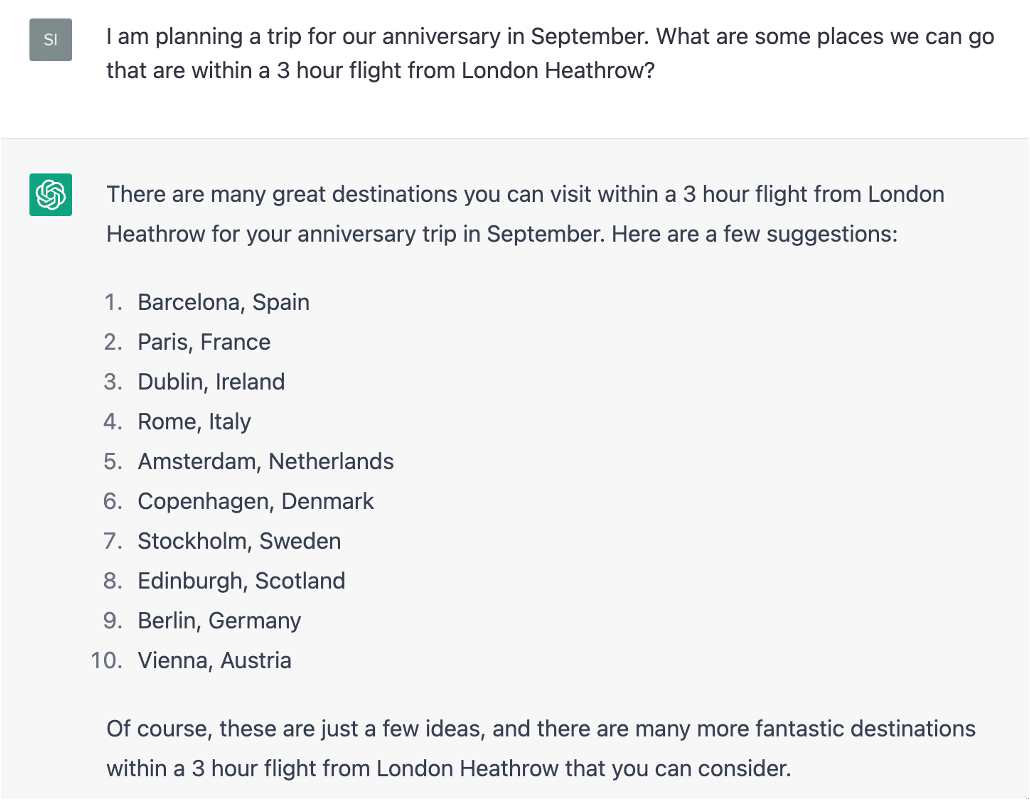

The last scenario is travel recommendation. We asked ChatGPT to recommend some travel destinations and to make a personalized travel plan. Figure 9 shows an example of non-personalized destination recommendations.

Figure 9: The response of ChatGPT when the user asks for recommendations of some places.

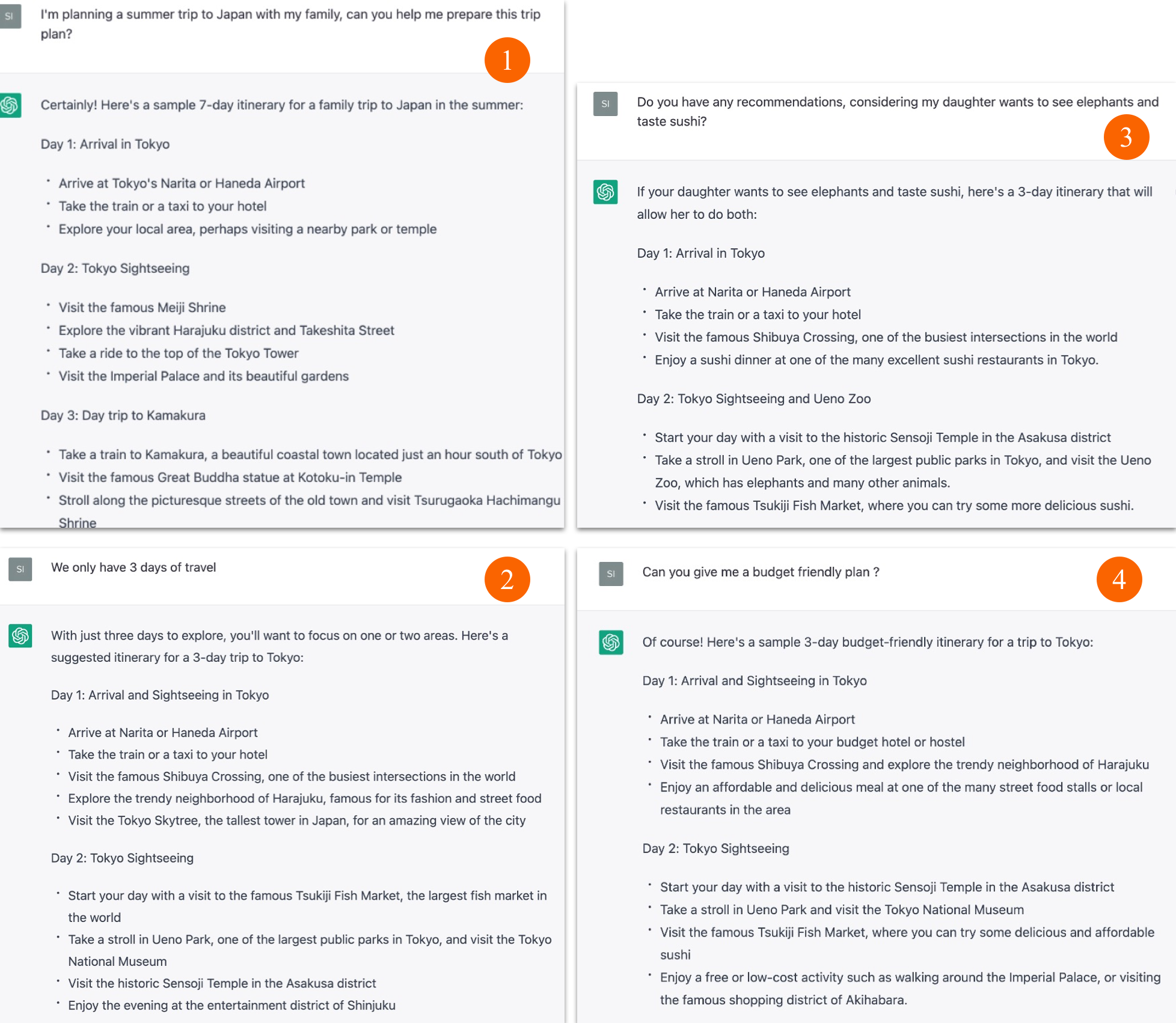

ChatGPT's capacity to provide personalized recommendations is reflected in its travel planning. As shown in Figure 10, we asked ChatGPT to make a detailed travel plan for a family trip to Japan, and the plan was adapted to the specific needs the user expressed in the conversation. We did not observe any mistakes in its responses. However, ChatGPT seems to intentionally avoid mentioning specific paid services, such as particular hotels or restaurants, in the travel plan.

Figure 10: The responses of ChatGPT when the user asks for a trip plan.

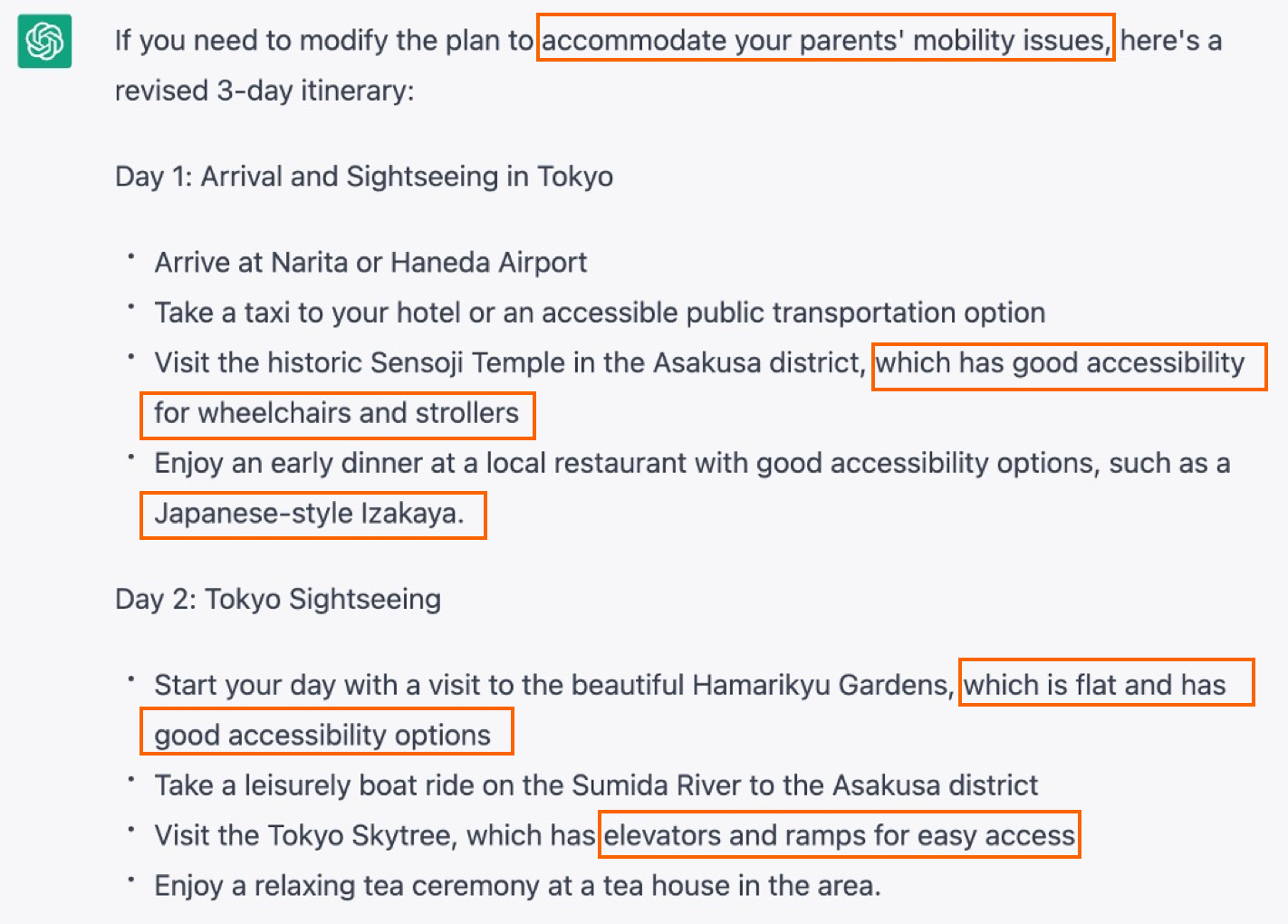

When the user has special needs, ChatGPT can cater to them and justify its recommendations in the response. As shown in Figure 11, the accessibility information given for each recommended site to justify the choice looks appropriate.

Figure 11: Recommendation for special needs with justification.

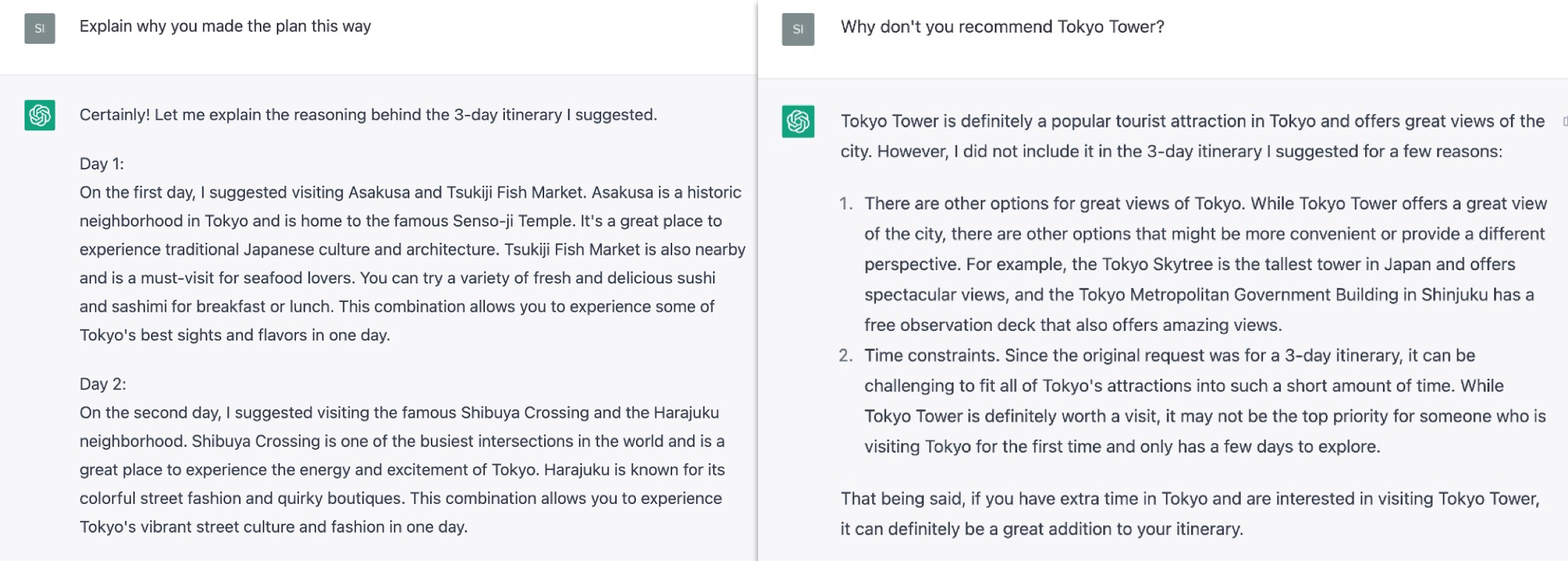

In addition, ChatGPT can provide reasonable explanations when the user asks, as shown in Figure 12. It can explain why it selected certain destinations or why it did not select a particular one.

Figure 12: Explanation of the reasons for selecting or not selecting a certain destination.

Summary

ChatGPT appears capable of acting as a human advisor, providing personalized recommendations with reasonable explanations and justifications. Although ChatGPT is primarily designed as an open-domain chatbot, its performance on recommendation tasks can offer valuable insights for developing task-oriented conversational recommender systems. At the current stage, however, it still has several issues and limitations.

Incorrect information

ChatGPT may generate false or misleading text. Although the generated content seems reasonable, it often contains factual errors. For example, as shown in Figure 5, although the song and album are by the right artist, the song does not appear on the mentioned album, and the release year is incorrect. Such inaccuracies may mislead users, especially those without domain knowledge, who must take extra steps to verify the information, and they may lead users to distrust the generated recommendations. This issue has also been observed in other domain-related tasks [4]. For instance, when ChatGPT is used to generate paper summaries, some summaries may mix similar content from different papers [5], or contain factual errors, misrepresentations, and/or wrong data [6]. Therefore, identifying mistakes in the responses of generative AI models deserves further exploration.

Prompts affect the response quality

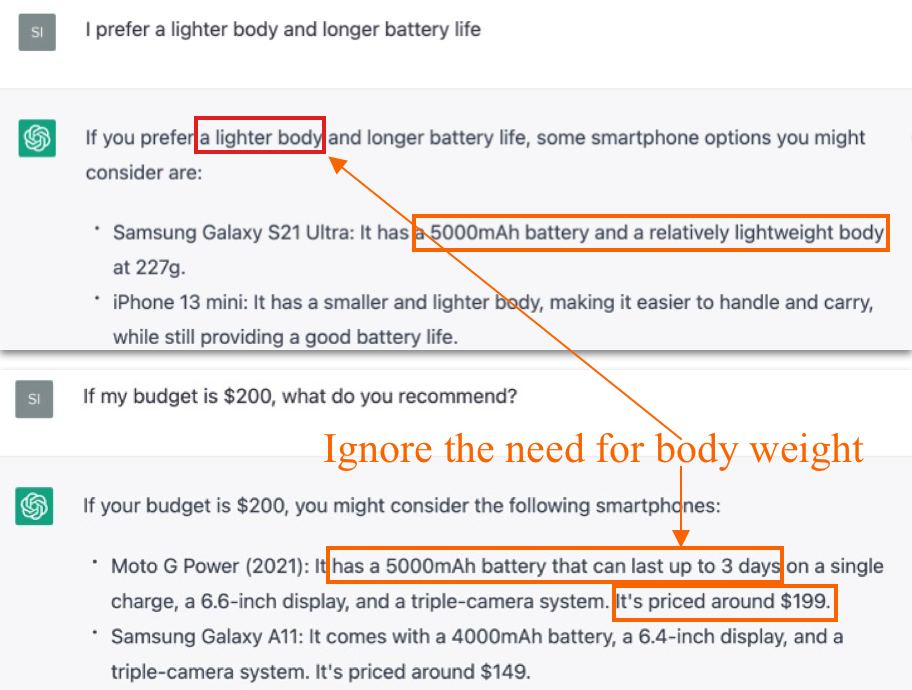

We observe that ChatGPT can “remember” the conversation history within the same session and respond appropriately based on context, which enables it to generate more satisfying recommendations. However, we notice that some attribute requirements mentioned by the user are lost or ignored over the multi-turn conversation (e.g., “a lighter body” was ignored in the second round of recommendations; see Figure 13).

Figure 13: Example in which the user’s stated preference was ignored in subsequent recommendations.

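Task-oriented recommenders usually guard against losing a requirement such as “a lighter body” with explicit dialogue state tracking: every constraint the user states is stored in a structured state that persists across turns until the user changes it. A minimal sketch, with hypothetical attribute names, values, and items; it is not a description of how ChatGPT tracks context.

```python
def update_state(state: dict[str, str], turn: dict[str, str]) -> dict[str, str]:
    """Merge one turn's constraints into the accumulated dialogue state.
    A later turn overrides an earlier value for the same attribute;
    attributes the user does not mention again are kept, not dropped."""
    merged = dict(state)
    merged.update(turn)
    return merged


def filter_items(items: list[dict[str, str]], state: dict[str, str]) -> list[str]:
    """Return the names of items that satisfy every accumulated constraint."""
    return [it["name"] for it in items
            if all(it.get(attr) == value for attr, value in state.items())]


# Hypothetical catalog and two user turns.
items = [
    {"name": "Item 1", "body": "light", "price": "low"},
    {"name": "Item 2", "body": "full", "price": "low"},
    {"name": "Item 3", "body": "light", "price": "high"},
]
state: dict[str, str] = {}
state = update_state(state, {"body": "light"})  # turn 1: "a lighter body"
state = update_state(state, {"price": "low"})   # turn 2: a new requirement
print(state)                       # {'body': 'light', 'price': 'low'}
print(filter_items(items, state))  # ['Item 1']
```

Keeping constraints in an explicit structure makes it possible to check every candidate against all of them, so a requirement from an earlier turn cannot be silently forgotten.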

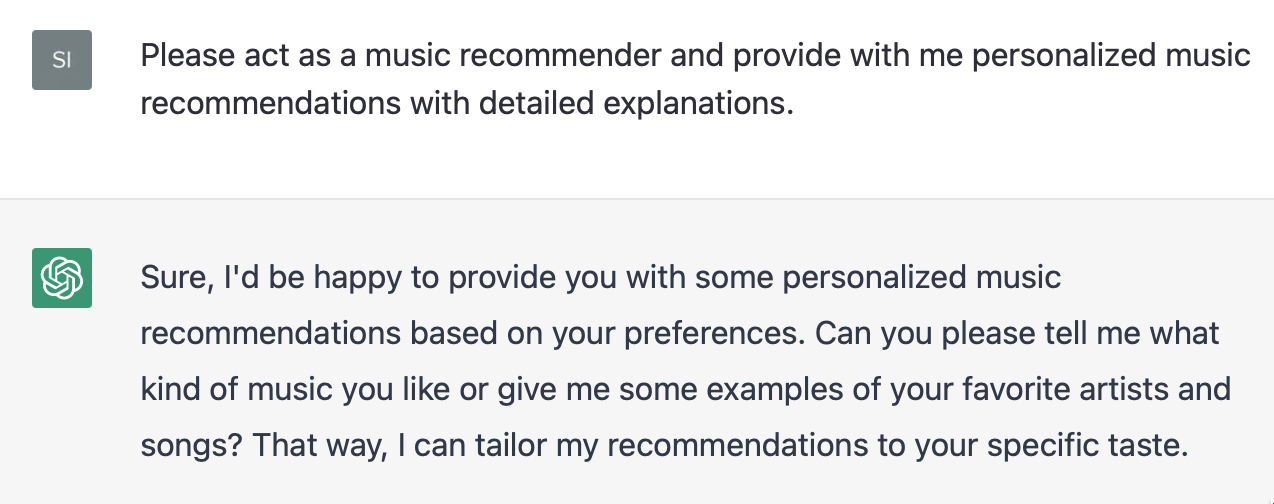

ChatGPT seems to perform better if the user asks it to behave as a personalized recommender (Figure 14). Such user inputs are called prompts in the NLP field. We have also observed the effectiveness of several widely used prompts [7]. By assigning a specific role and providing detailed requirements at the beginning of the conversation, users may obtain the desired responses from ChatGPT. Based on these findings, it is worth investigating how to better communicate with ChatGPT in order to receive more personalized and accurate recommendations.

Figure 14: ChatGPT proactively asks the user for her preferences when, at the start of the conversation, it is asked to act as a music recommender that provides personalized recommendations.

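When a chat model is accessed programmatically, chat-style interfaces such as OpenAI's Chat Completions API take the conversation as a list of messages, each with a role (for example "system" or "user") and content, and a role-assigning prompt naturally goes into the opening system message. The sketch below only builds such a list; it does not call any API, the helper name `build_messages` is hypothetical, and the prompt wording is an illustrative example rather than the prompt used in Figure 14.

```python
def build_messages(role: str, requirements: list[str],
                   user_turns: list[str]) -> list[dict[str, str]]:
    """Put the role and requirements in an opening system message, then append user turns."""
    system_prompt = f"Act as a {role}. " + " ".join(requirements)
    messages = [{"role": "system", "content": system_prompt}]
    messages += [{"role": "user", "content": turn} for turn in user_turns]
    return messages


messages = build_messages(
    role="personalized music recommender",
    requirements=["Ask about my preferences before recommending anything.",
                  "Explain each recommendation in one sentence."],
    user_turns=["Please recommend some music to me."],
)
for m in messages:
    print(m["role"], "|", m["content"])
```

Stating the role and requirements once, at the start, mirrors the observation above that front-loading detailed requirements tends to yield more personalized behavior.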

Outdated data

We tested the January 2023 version of ChatGPT, which was trained on data up to September 2021 [8]. Therefore, its recommendations do not include recent items, such as recently released phones. This issue might be addressed by allowing the model to search the web in real time to retrieve up-to-date information.
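The remedy suggested above, fetching fresh information at query time and handing it to the model, is commonly called retrieval augmentation. A minimal sketch, assuming a hypothetical local catalog that stands in for a real web search and taking the end of September 2021 as the cutoff date; the function names are illustrative only.

```python
from datetime import date

# Assumed knowledge cutoff: end of September 2021.
KNOWLEDGE_CUTOFF = date(2021, 9, 30)


def retrieve_recent(catalog: list[dict], category: str,
                    cutoff: date = KNOWLEDGE_CUTOFF) -> list[dict]:
    """Stand-in for a live search: items in the category released after the cutoff."""
    return [item for item in catalog
            if item["category"] == category and item["released"] > cutoff]


def augment_prompt(question: str, retrieved: list[dict]) -> str:
    """Prepend the retrieved items to the user's question as extra context."""
    context = "\n".join(f"- {item['name']} (released {item['released'].isoformat()})"
                        for item in retrieved)
    return f"Recently released items:\n{context}\n\nQuestion: {question}"


# Hypothetical catalog standing in for search results.
catalog = [
    {"name": "Phone X", "category": "smartphone", "released": date(2021, 3, 1)},
    {"name": "Phone Y", "category": "smartphone", "released": date(2022, 9, 15)},
    {"name": "Tablet Z", "category": "tablet", "released": date(2022, 10, 1)},
]
recent = retrieve_recent(catalog, "smartphone")
print(augment_prompt("Can you recommend a smartphone to me?", recent))
```

Only items newer than the cutoff are injected, since anything older is assumed to be covered by the model's training data already.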

We believe ChatGPT has great potential for supporting recommendation tasks. However, ChatGPT remains a “black box”, and our preliminary tests have revealed only a few of its features in these three typical recommendation scenarios. Future studies could focus on how to better exploit its capabilities to develop responsible and reliable recommender tools. It is also important to consider how end users can effectively communicate with it to obtain accurate and satisfying recommendations.

References:

[1] https://cyprus-mail.com/2023/02/05/chatgpt-sets-record-for-fastest-growing-user-base/

[4] Zhou, J., Ke, P., Qiu, X., Huang, M., & Zhang, J. (2023). ChatGPT: potential, prospects, and limitations. Frontiers of Information Technology & Electronic Engineering. https://doi.org/10.1631/FITEE.2300089

[5] Tlili, A., Shehata, B., Adarkwah, M. A., Bozkurt, A., Hickey, D. T., Huang, R., & Agyemang, B. (2023). What if the devil is my guardian angel: ChatGPT as a case study of using chatbots in education. Smart Learning Environments, 10(1), 1-24.

[6] van Dis, E. A., Bollen, J., Zuidema, W., van Rooij, R., & Bockting, C. L. (2023). ChatGPT: five priorities for research. Nature, 614(7947), 224-226.